
Overview

Below is an overview of the current discussion topics within the Trusted AI Committee. Further updates will follow as the committee work develops.

The committee focuses on policies, guidelines, tooling, and use cases by industry. Current discussion topics include:

- Surveying and contacting current open source Trusted AI related projects to invite them to join LF AI efforts

- Creating a badging or certification process for open source projects that meet the Trusted AI policies/guidelines defined by LF AI

- Creating a document that describes the basic concepts and definitions related to Trusted AI and aims to standardize the vocabulary/terminology

Mail List

Please self-subscribe to the mailing list at https://lists.lfai.foundation/g/trustedai-committee.

Alternatively, email trustedai-committee@lists.lfai.foundation for more information.

Participants

Initial Organizations Participating: AT&T, Amdocs, Ericsson, IBM, Orange, TechM, Tencent

Committee Chairs

| Name | Region | Organization | Email Address | LF ID |
|---|---|---|---|---|
| Animesh Singh | North America | IBM | | |
| Souad Ouali | Europe | Orange | | |
| Jeff Cao | Asia | Tencent | | |

Committee Participants

| Name | Organization | Email Address | LF ID |
|---|---|---|---|
| Ofer Hermoni | Amdocs | oferher@gmail.com | |
| Mazin Gilbert | ATT | | |
| Alka Roy | ATT | | |
| Mikael Anneroth | Ericsson | | |
| Alejandro Saucedo | The Institute for Ethical AI and Machine Learning | | |
| Jim Spohrer | IBM | spohrer | |
| Maureen McElaney | IBM | | |
| Susan Malaika | IBM | sumalaika (but different email address) | |
| Romeo Kienzler | IBM | | |
| Francois Jezequel | Orange | | |
| Nat Subramanian | Tech Mahindra | | |
| Han Xiao | Tencent | | |
| Wenjing Chu | Futurewei | chu.wenjing@gmail.com | |
| Yassi Moghaddam | ISSIP | yassi@issip.org | |

Assets

- All the assets being

Sub Categories

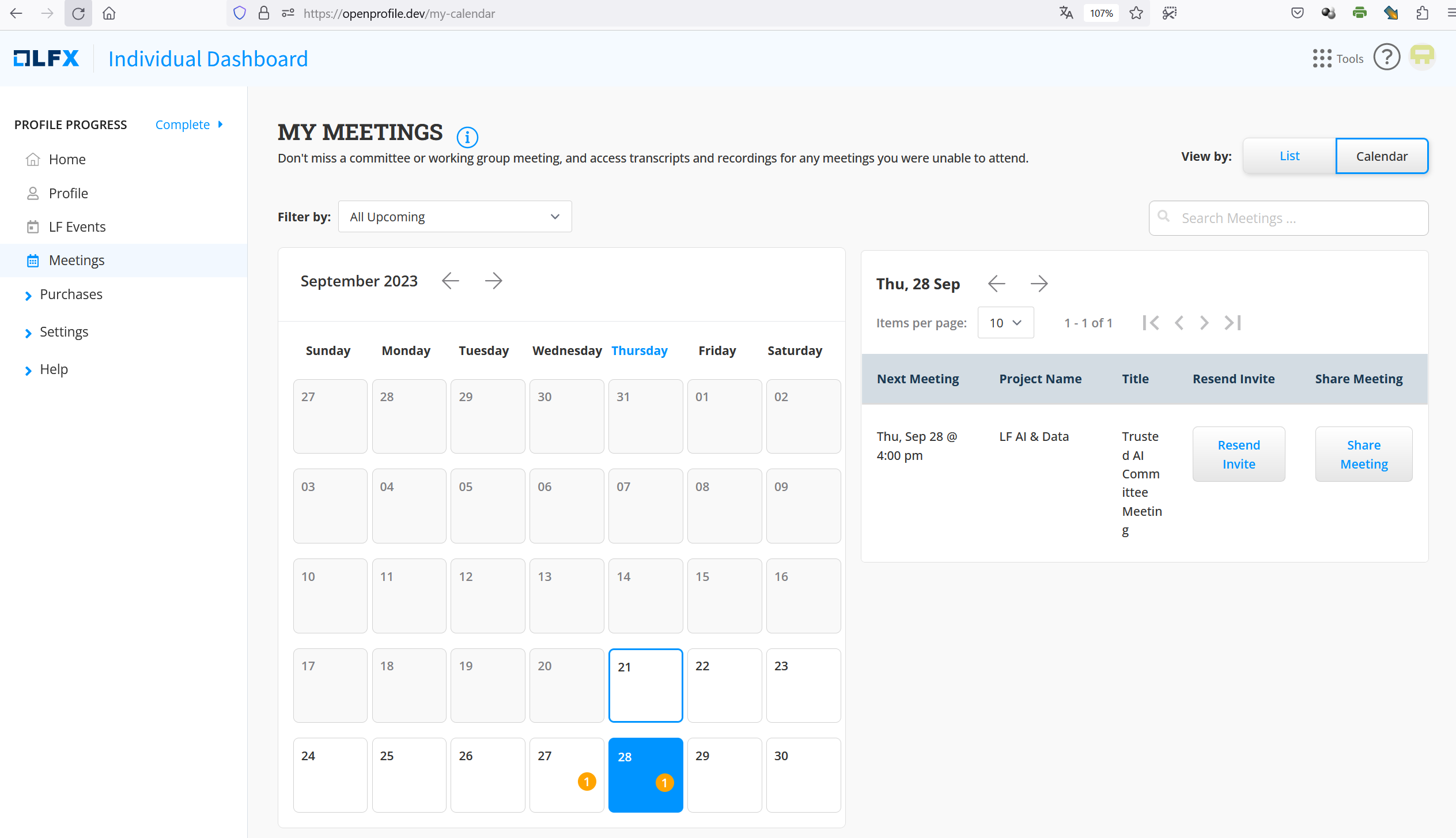

We switched to the new LFX system for meeting scheduling and recording. All previous mailing list subscribers should be listed as LFX Members of the Committee. All recordings should be available at https://openprofile.dev/.

(Screenshot of the openprofile calendar)

Overview

Below is an overview of the current discussion topics within the Trusted AI Committee. Further updates will follow as the committee work develops.

The committee focuses on policies, guidelines, tooling, and use cases by industry. Current discussion topics include:

- Surveying and contacting current open source Trusted AI related projects to invite them to join LF AI & Data efforts

- Creating a badging or certification process for open source projects that meet the Trusted AI policies/guidelines defined by LF AI & Data

- Creating a document that describes the basic concepts and definitions related to Trusted AI and aims to standardize the vocabulary/terminology

Assets

- Calendar: https://lists.lfaidata.foundation/g/trustedai-committee/calendar

- Slack: lfaifoundation.slack.com

- Mailing list: Feed (lfaidata.foundation)

- Self-subscribe to the mailing list at https://lists.lfaidata.foundation/g/trustedai-committee

- Visit the Trusted AI Committee Group Calendar to self-subscribe to meetings

- Alternatively, email trustedai-committee@lists.lfaidata.foundation for more information

- Repo: Trusted-AI (github.com)

- Principles working group: https://wiki.lfai.foundation/display/DL/Principles+Working+Group

- Meeting hosts / moderators: Trusted AI Committee - Community Meetings & Calendar (section "Zoom Meeting Links & Hosts")

- Meeting Zoom: https://zoom-lfx.platform.linuxfoundation.org/meeting/94505370068?password=bde61b75-05ae-468f-9107-7383d8f3e449

- Other meeting notes: LFAI Trusted AI Community Meeting - Google Docs (outdated and deprecated; new meeting notes are published here)

Meetings


Trusted AI Committee Monthly Meeting - 4th Thursday of the month (additional meetings as needed)

- 10 AM ET USA (reference time; the conversions for all other time zones must be checked for daylight saving time)

- 10 PM Shenzhen China

- 7:30 PM India

- 4 PM Paris

- 7 AM PT USA (updated for daylight saving time as needed)
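China and India do not observe daylight saving time while the US and Europe do, so the local times listed above shift during part of the year. The following is a minimal sketch (Python 3.9+, standard library only) that finds the 4th Thursday of a month and converts the 10 AM US Eastern reference time into the other listed zones. The IANA zone names and the helper names `fourth_thursday` and `local_times` are illustrative assumptions, and the sketch assumes the system time-zone database is available to `zoneinfo`.

```python
from datetime import date, datetime, time
from zoneinfo import ZoneInfo

# Reference start time stated on this page: 10 AM US Eastern.
REFERENCE_TZ = ZoneInfo("America/New_York")
MEETING_TIME = time(10, 0)

# Assumed IANA zone names for the locations listed above.
LOCAL_ZONES = {
    "Shenzhen": "Asia/Shanghai",
    "India": "Asia/Kolkata",
    "Paris": "Europe/Paris",
    "US Pacific": "America/Los_Angeles",
}


def fourth_thursday(year: int, month: int) -> date:
    """Return the 4th Thursday of the given month (hypothetical helper)."""
    first = date(year, month, 1)
    # date.weekday() counts Monday as 0, so Thursday is 3.
    days_to_first_thursday = (3 - first.weekday()) % 7
    return date(year, month, 1 + days_to_first_thursday + 21)


def local_times(meeting_day: date) -> dict[str, str]:
    """Convert the 10 AM Eastern start on meeting_day into each listed zone."""
    start = datetime.combine(meeting_day, MEETING_TIME, tzinfo=REFERENCE_TZ)
    return {
        place: start.astimezone(ZoneInfo(zone)).strftime("%H:%M")
        for place, zone in LOCAL_ZONES.items()
    }


if __name__ == "__main__":
    for year, month in [(2023, 1), (2023, 3), (2023, 7)]:
        day = fourth_thursday(year, month)
        print(day, local_times(day))
```

For the January 2023 meeting the sketch reports 11 PM in Shenzhen and 8:30 PM in India, one hour later than the summer times above. For 23 March 2023, when the US had already switched to daylight saving time but Europe had not, it reports 3 PM for Paris.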

Zoom channel:

Committee Chairs

| Name | Region | Organization | Email Address | LF ID | LinkedIn |
|---|---|---|---|---|---|
| Andreas Fehlner | Europe | ONNX | | | https://www.linkedin.com/in/andreas-fehlner-60499971 |
| Susan Malaika | America | IBM | Susan Malaika | | https://www.linkedin.com/in/susanmalaika |
| Suparna Bhattacharya | Asia | HPE | suparna.bhattacharya@hpe.com | Suparna Bhattacharya | https://www.linkedin.com/in/suparna-bhattacharya-5a7798b |
| Adrian Gonzalez Sanchez | Europe | HEC Montreal / Microsoft / OdiseIA | Adrian Gonzalez Sanchez (but different email address) | | https://www.linkedin.com/in/adriangs86 |

Participants

Initial Organizations Participating: IBM, Orange, AT&T, Amdocs, Ericsson, TechM, Tencent

| Name | Organization | Email Address | LF ID |
|---|---|---|---|
| Ofer Hermoni | PieEye | Ofer Hermoni | |
| Mazin Gilbert | ATT | ... | |
| Alka Roy | Responsible Innovation Project | ... | |
| Mikael Anneroth | Ericsson | ... | |
| Alejandro Saucedo | The Institute for Ethical AI and Machine Learning | Alejandro Saucedo | |
| Jim Spohrer | Retired IBM, ISSIP.org | | |
| Saishruthi Swaminathan | IBM | Saishruthi Swaminathan | |
| Susan Malaika | IBM | sumalaika (but different email address) | |
| Romeo Kienzler | IBM | Romeo Kienzler | |
| Francois Jezequel | Orange | Francois Jezequel | |
| Nat Subramanian | Tech Mahindra | Natarajan Subramanian | |
| Han Xiao | Tencent | ... | |
| Wenjing Chu | Futurewei | | |
| Yassi Moghaddam | ISSIP | Yassi Moghaddam | |
| Animesh Singh | IBM | singhan@us.ibm.com | Animesh Singh |
| Souad Ouali | Orange | souad.ouali@orange.com | Souad Ouali |
| Jeff Cao | Tencent | jeffcao@tencent.com | ... |
| Ron Doyle | Broadcom | ron.doyle@broadcom.com | |

Sub Categories

- Fairness: Methods to detect and mitigate bias in datasets and models, including bias against known protected populations

- Robustness: Methods to detect alterations/tampering with datasets and models, including alterations from known adversarial attacks

- Explainability: Methods to make AI model outcomes and decision recommendations understandable and interpretable for the personas/roles involved in the process, including ranking and debating results/decision options

- Lineage: Methods to ensure provenance of datasets and AI models, including reproducibility of generated datasets and AI models

Projects

| Name | GitHub | Website |
|---|---|---|
| AI Fairness 360 | | |
| Adversarial Robustness 360 | | |
| AI Explainability 360 | | |


Working Groups

Trusted AI Principles Working Group

Trusted AI Technical Working Group

Meetings

Zoom info: Trusted AI Committee meeting, alternate Thursdays: 10 PM Shenzhen China, 4 PM Paris, 10 AM ET, 7 AM PT USA (updated for daylight saving time as needed)

https://zoom.us/j/7659717866

How to join: Visit the Trusted AI Committee Group Calendar to self-subscribe to meetings.

Alternatively, email trustedai-committee@lists.lfai.foundation for more information.

Meeting Content (minutes / recording / slides / other):


Date | Agenda/Minutes | ||||||

|---|---|---|---|---|---|---|---|

| Thu, Sep 28 @ 4:00 pm | The recording can also be accessed at http://openprofile.dev | ||||||

| Trusted AI Committee | |||||||

| * Preparing for September 7 TAC session

| ||||||

| Friday, August 11, at 10am US Eastern: working session to prepare for the Trusted AI Committee presentation to the TAC on Thursday, September 7 at 9am US Eastern | ||||||

|

Trusted_AI_Committee_2023_07_27_Handout_ONNX_Nocker.pdf TrustedAI_20230727.mp4 - Topics included CMF developments with ONNX and homomorphically encrypted machine learning with ONNX models | ||||||

| CMF and AI Explainability led by Suparna Bhattacharya and Vijay Arya - with MaryAnn, Gabe and Soumi Das

| ||||||

| MarkTechPost, Jean-Marc Mommessin; Active and Continuous Learning for Trusted AI, Martin Foltin; AI Explainability, Vijay Arya | ||||||

|

Recording (video) Zoom Recording (video) confluence | ||||||

| Part 0 - Metadata / Lineage / Provenance topic from Suparna Bhattacharya, Aalap Tripathy, Ann Mary Roy, Professor Soranghsu Bhattacharya & Team

Part 1 - Open Voice Network - Introductions https://openvoicenetwork.org

Part 2 - Identify small steps/publications to motivate concrete actions over 2023 in the context of these pillars: Technology | Education | Regulations | Shifting power: Librarians / Ontologies / Tools; Possible Publications / Blogs

Part 5 - Any Other Business

Recording (video)

| ||||||

| Join the Trusted AI Committee at LF AI for the upcoming session on April 27 at 10am Eastern, where you will hear from:

We all have prework to do! Please listen to these videos:

Recording (video) Recording (audio) | ||||||

| Proposed agenda (ET)

Call Lead: Susan Malaika | ||||||

| Invitees: Beat Buesser, Phaedra Boinodiris, Alexy Khrabov, David Radley, Adrian Gonzalez Sanchez

Optional: Ofer Hermoni, Nancy Rausch, Alejandro Saucedo, Sri Krishnamurthy, Andreas Fehlner, Suparna Bhattacharya

Attendees: Beat Buesser, Phaedra Boinodiris, Alexy Khrabov, Adrian Gonzalez Sanchez, Ofer Hermoni, Andreas Fehlner

Discussion

Next steps

| ||||||

| malaika@us.ibm.com has scheduled a call on Monday, October 31, 2022 to determine next steps for the committee following a change in leadership. Please contact Susan if you would like to be added to the call. The group met once a month, on the third Thursday of each month at 10am US Eastern. See the notes below for prior calls. Activities of the committee included:

Reporting to:

Questions:

Invitees and interested parties on the call on October 31, 2022

| ||||||


Walkthrough of the LFAI Trusted AI website and GitHub location of projects

Trusted AI Video Series

Trusted AI Course in collaboration with the University of Pennsylvania | |||||||

Z-Inspection: A holistic and analytic process to assess Ethical AI - Roberto Zicari - University of Frankfurt, Germany

Age-At-Home - the exemplar of TrustedAI, David Martin, Hacker in Charge at motion-ai.com | |||||||

Plotly Demo with SHAP and AIX360 - Xing Han, Plot.ly

Swiss Digital Trust Label - short summary - Romeo Kienzler, IBM

| |||||||

Watson OpenScale and Trusted AI - Eric Martens, IBM

LFAI Ethics Training course

Recording:

Slack: https://lfaifoundation.slack.com/archives/CPS6Q1E8G/p1595515808086900 | |||||||


|

Agenda


Proposed Agenda


| |

Discuss AIF360 work around the SKLearn (scikit-learn) community (Samuel Hoffman, IBM Research demo)

Discuss "Many organizations have principles documents, and a bit of backlash - for not enough practical examples."

Notes from the call: |

Proposed Agenda

Notes from the call: |

https://github.com/lfai/trusted-ai/blob/master/committee-meeting-notes/notes-20191212.md | |

Proposed Agenda:

Notes from call: |

https://github.com/lfai/trusted-ai/blob/master/committee-meeting-notes/notes-20191114.md | |

Attendees: Ofer, Alka, Francois, Nat, Han, Animesh, Jim, Maureen, Susan, Alejandro

Summary

Detail

Notes from the call: |

https://github.com/lfai/trusted-ai/blob/master/committee-meeting-notes/notes-20191017.md | |

Attendees: Animesh Singh (IBM), Maureen McElaney (IBM), Han Xiao (Tencent), Alejandro Saucedo, Mikael Anneroth (Ericsson), Ofer Hermoni (Amdocs)

Animesh will check with Souad Ouali to confirm that Orange wants to lead the Principles working group and host regular meetings. Committee members on the call were not included in the email chains that occurred, so we need to confirm who is in charge and how communication will occur. The Technical working group has made progress but has nothing concrete to report yet. A possible third working group could form around AI Standards. Notes from the call: |

https://github.com/lfai/trusted-ai/blob/master/committee-meeting-notes/notes-20191003.md | |

| Attendees: |

Ibrahim H., Nat S., Animesh S., Alka R., Jim S., Francois J., Jeff C., Maureen M., Mikael A., Ofer H., Romeo K.

Working Group Names and Leads have been confirmed:

|

Possible discussion about a third working group

Discussion about LFAI Day in Paris

More next steps

Will begin recording meetings in future calls.

Notes from call: https://github.com/lfai/trusted-ai/blob/master/committee-meeting-notes/notes-20190919.md |