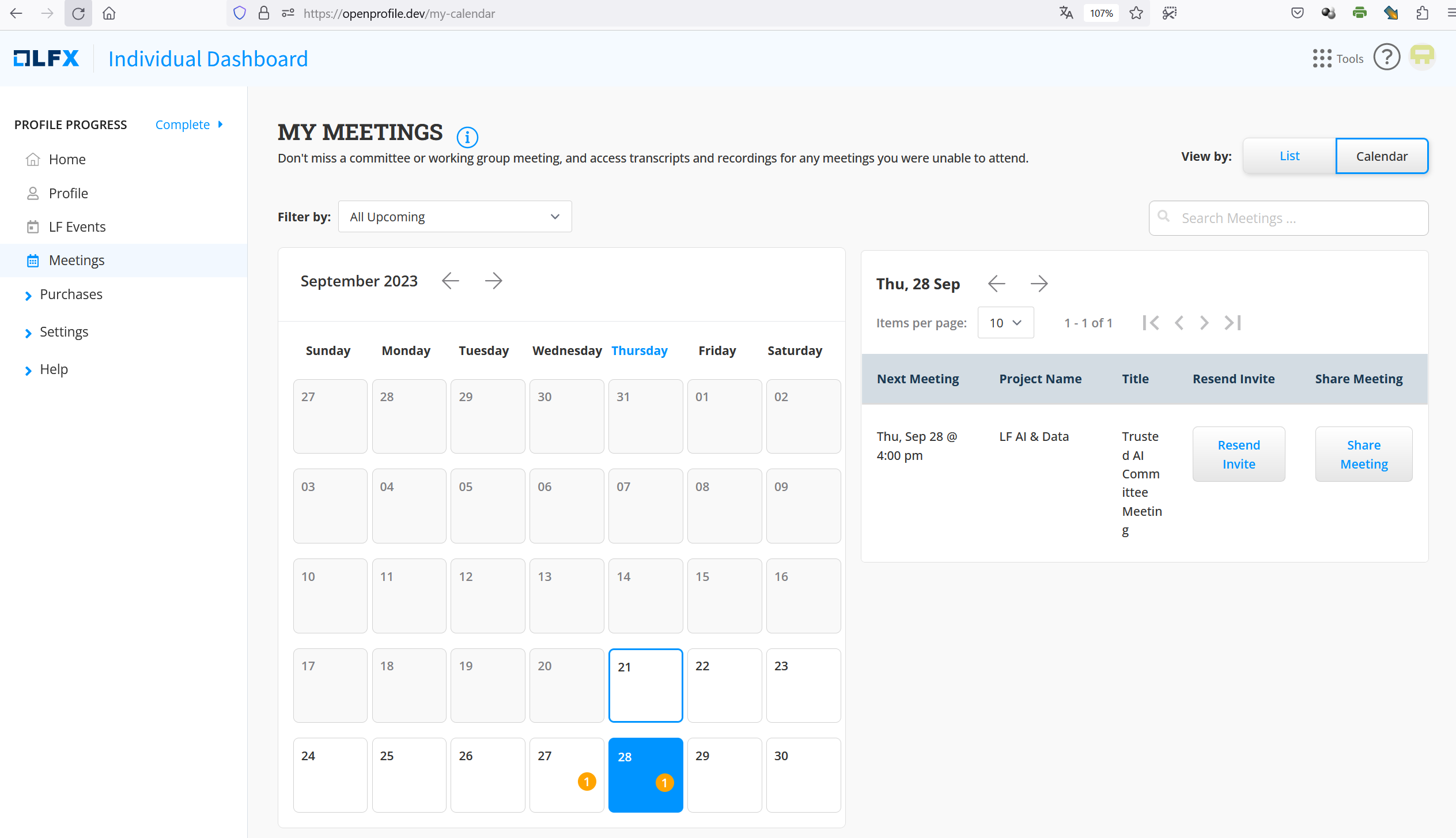

We have switched to the new LFX system for meeting scheduling and recording. All previous mailing list subscribers should be listed as LFX members of the committee. You should be able to see all the recordings at https://openprofile.dev/.

(Screenshot of the openprofile calendar)

Overview

Below is an overview of the current discussion topics within the Trusted AI Committee. Further updates will follow as the committee work develops.

- Focus of the committee: policies, guidelines, tooling, and use cases by industry

- Survey current open source Trusted AI-related projects and contact them about joining LF AI & Data efforts

- Create a badging or certification process for open source projects that meet the Trusted AI policies/guidelines defined by LF AI & Data

- Create a document that describes the basic concepts and definitions related to Trusted AI and aims to standardize the vocabulary/terminology

Assets

- Calendar: https://lists.lfaidata.foundation/g/trustedai-committee/calendar

- Slack: lfaifoundation.slack.com

- Mailing list: Feed (lfaidata.foundation)

- Please self-subscribe to the mailing list at https://lists.lfaidata.foundation/g/trustedai-committee

- Visit the Trusted AI Committee Group Calendar to self-subscribe to meetings

- Or email trustedai-committee@lists.lfaidata.foundation for more information

- Repo: Trusted-AI (github.com)

- Principles working group: https://wiki.lfai.foundation/display/DL/Principles+Working+Group

- Meeting hosts / moderators: see the "Zoom Meeting Links & Hosts" section of the Trusted AI Committee - Community Meetings & Calendar page

- Meeting Zoom: https://zoom-lfx.platform.linuxfoundation.org/meeting/94505370068?password=bde61b75-05ae-468f-9107-7383d8f3e449

- Other meeting notes: LFAI Trusted AI Community Meeting - Google Docs (outdated/deprecated; new meeting notes are published on this page)

Meetings


Trusted AI Committee Monthly Meeting - 4th Thursday of the month (additional meetings as needed)

- 10 AM ET USA (reference time; for the other locations, check the time conversion against daylight saving time)

- 10 PM Shenzhen China

- 7:30 PM India

- 4 PM Paris

- 7 AM PT USA (updated for daylight savings time as needed)
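The times listed above assume US daylight saving time: China and India do not observe daylight saving time, so in winter their local times shift by one hour, and Paris shifts briefly when the US and the EU change clocks on different dates. As a minimal sketch (not an official LFX or LF AI tool), the following Python computes the 4th Thursday of a month and converts the 10 AM ET reference slot into the other listed locations. It assumes Python 3.9+ with the IANA time zone database available (system tzdata or the `tzdata` package); the helper names `fourth_thursday` and `meeting_times`, and the zone choices `Asia/Shanghai` for Shenzhen and `Asia/Kolkata` for India, are illustrative assumptions.

```python
from datetime import date, datetime, time, timedelta
from zoneinfo import ZoneInfo

# Reference slot from the wiki: 10:00 US Eastern on the 4th Thursday.
REFERENCE_TZ = ZoneInfo("America/New_York")
REFERENCE_TIME = time(10, 0)

# Locations from the list above, mapped to IANA zone names (assumption:
# Asia/Shanghai covers Shenzhen, Asia/Kolkata covers India).
LOCAL_ZONES = {
    "Shenzhen": "Asia/Shanghai",
    "India": "Asia/Kolkata",
    "Paris": "Europe/Paris",
    "US Pacific": "America/Los_Angeles",
}


def fourth_thursday(year: int, month: int) -> date:
    """Return the date of the 4th Thursday of the given month (hypothetical helper)."""
    first = date(year, month, 1)
    # date.weekday(): Monday == 0, ..., Thursday == 3
    days_to_first_thursday = (3 - first.weekday()) % 7
    return first + timedelta(days=days_to_first_thursday + 21)


def meeting_times(year: int, month: int) -> dict[str, str]:
    """Convert the 10 AM ET slot on the 4th Thursday into each listed zone."""
    meeting_day = fourth_thursday(year, month)
    # zoneinfo resolves EST vs. EDT for this specific date.
    start = datetime.combine(meeting_day, REFERENCE_TIME, tzinfo=REFERENCE_TZ)
    times = {"US Eastern": start.strftime("%Y-%m-%d %H:%M")}
    for label, zone_name in LOCAL_ZONES.items():
        local = start.astimezone(ZoneInfo(zone_name))
        times[label] = local.strftime("%Y-%m-%d %H:%M")
    return times


if __name__ == "__main__":
    for month in (1, 3, 9):
        print(month, meeting_times(2023, month))
```

For example, `meeting_times(2023, 9)` gives 22:00 in Shenzhen on 28 September, while `meeting_times(2023, 1)` gives 23:00 on 26 January; for March 2023 Paris comes out at 15:00 because the EU switched to summer time two weeks after the US.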

Zoom channel:

Committee Chairs

| Name | Region | Organization | Email Address | LF ID | LinkedIn |
|---|---|---|---|---|---|
| Andreas Fehlner | Europe | ONNX | | | https://www.linkedin.com/in/andreas-fehlner-60499971 |
| Susan Malaika | America | IBM | Susan Malaika | | https://www.linkedin.com/in/susanmalaika |
| Suparna Bhattacharya | Asia | HPE | suparna.bhattacharya@hpe.com | Suparna Bhattacharya | https://www.linkedin.com/in/suparna-bhattacharya-5a7798b |
| Adrian Gonzalez Sanchez | Europe | HEC Montreal / Microsoft / OdiseIA | Adrian Gonzalez Sanchez (but different email address) | | https://www.linkedin.com/in/adriangs86 |

Participants

Initial Organizations Participating: IBM, Orange, AT&T, Amdocs, Ericsson, TechM, Tencent

| Name | Organization | Email Address | LF ID |
|---|---|---|---|
| Ofer Hermoni | PieEye | Ofer Hermoni | |
| Mazin Gilbert | ATT | ... | |
| Alka Roy | Responsible Innovation Project | ... | |
| Mikael Anneroth | Ericsson | ... | |
| Alejandro Saucedo | The Institute for Ethical AI and Machine Learning | Alejandro Saucedo | |
| Jim Spohrer | Retired IBM, ISSIP.org | | |
| Saishruthi Swaminathan | IBM | Saishruthi Swaminathan | |
| Susan Malaika | IBM | sumalaika (but different email address) | |
| Romeo Kienzler | IBM | Romeo Kienzler | |
| Francois Jezequel | Orange | Francois Jezequel | |
| Nat Subramanian | Tech Mahindra | Natarajan Subramanian | |
| Han Xiao | Tencent | ... | |
| Wenjing Chu | Futurewei | | |
| Yassi Moghaddam | ISSIP | Yassi Moghaddam | |
| Animesh Singh | IBM | singhan@us.ibm.com | Animesh Singh |
| Souad Ouali | Orange | souad.ouali@orange.com | Souad Ouali |
| Jeff Cao | Tencent | jeffcao@tencent.com | ... |
| Ron Doyle | Broadcom | ron.doyle@broadcom.com | |

Sub Categories

- Fairness: Methods to detect and mitigate bias in datasets and models, including bias against known protected populations

- Robustness: Methods to detect alterations/tampering with datasets and models, including alterations from known adversarial attacks

- Explainability: Methods to make AI model outcomes and decision recommendations understandable/interpretable to the personas/roles involved in the process, including ranking and debating results/decision options

- Lineage: Methods to ensure provenance of datasets and AI models, including reproducibility of generated datasets and AI models

Projects

| Name | GitHub | Website |
|---|---|---|
| AI Fairness 360 | | |
| Adversarial Robustness 360 | | |
| AI Explainability 360 | | |

Meeting Content (minutes / recording / slides / other)

| Date | Agenda/Minutes |
|---|---|
| Thu, Sep 28 @ 4:00 pm | Recording can also be accessed at http://openprofile.dev |

| Trusted AI Committee | |

| * Preparing for September 7 TAC session |

| Friday, August 11, at 10am US Eastern: working session to prepare for the Trusted AI Committee presentation to the TAC on Thursday, September 7, at 9am US Eastern |

|

Trusted_AI_Committee_2023_07_27_Handout_ONNX_Nocker.pdf, TrustedAI_20230727.mp4. Topics included CMF developments with ONNX and homomorphically encrypted machine learning with ONNX models |

| CMF and AI Explainability led by Suparna Bhattacharya and Vijay Arya - with MaryAnn, Gabe and Soumi Das |

| MarkTechPost, Jean-marc Mommessin; Active and Continuous Learning for Trusted AI, Martin Foltin; AI Explainability, Vijay Arya |

|

Recording (video) Zoom Recording (video) confluence |

| Part 0 - Metadata / Lineage / Provenance topic from Suparna Bhattacharya, Aalap Tripathy, Ann Mary Roy, Professor Soranghsu Bhattacharya & Team

Part 1 - Open Voice Network - Introductions: https://openvoicenetwork.org

Part 2 - Identify small steps/publications to motivate concrete actions over 2023 in the context of these pillars: Technology, Education, Regulations, Shifting power

Librarians / Ontologies / Tools

Possible Publications / Blogs

Part 5 - Any Other Business Recording (video) |

| Join the Trusted AI Committee at LF AI for the upcoming session on April 27 at 10am Eastern, where you will hear from:

We all have prework to do! Please listen to these videos:

Recording (video) Recording (audio) |

| Proposed agenda (ET)

Call Lead: Susan Malaika |

| Invitees: Beat Buesser, Phaedra Boinodiris, Alexy Khrabov, David Radley, Adrian Gonzalez Sanchez

Optional: Ofer Hermoni, Nancy Rausch, Alejandro Saucedo, Sri Krishnamurthy, Andreas Fehlner, Suparna Bhattacharya

Attendees: Beat Buesser, Phaedra Boinodiris, Alexy Khrabov, Adrian Gonzalez Sanchez, Ofer Hermoni, Andreas Fehlner

Discussion

Next steps

|

| malaika@us.ibm.com has scheduled a call on Monday, October 31, 2022, to determine next steps for the committee following a change in leadership. Please contact Susan if you would like to be added to the call. The group met once a month, on the third Thursday of each month at 10am US Eastern. See the notes below for prior calls. Activities of the committee included:

Reporting to:

Questions:

Invitees and interested parties on the call on October 31, 2022

|


Walkthrough of the LFAI Trusted AI website and GitHub location of projects; Trusted AI Video Series; Trusted AI Course in collaboration with the University of Pennsylvania | |

Z-Inspection: A holistic and analytic process to assess Ethical AI - Roberto Zicari - University of Frankfurt, Germany; Age-At-Home - the exemplar of TrustedAI, David Martin, Hacker in Charge at motion-ai.com | |

Plotly Demo with SHAP and AIX360 - Xing Han, Plot.ly

Swiss Digital Trust Label - short summary - Romeo Kienzler, IBM

| |

Watson OpenScale and Trusted AI - Eric Martens, IBM

LFAI Ethics Training course

Recording: Slack: https://lfaifoundation.slack.com/archives/CPS6Q1E8G/p1595515808086900 | |


Discuss AIF360 work around the scikit-learn community (Samuel Hoffman, IBM Research demo)

Discuss "Many organizations have principles documents, and a bit of backlash - for not enough practical examples."

Notes from the call: https://github.com/lfai/trusted-ai/blob/master/committee-meeting-notes/notes-20200123.md | |

Proposed Agenda

Notes from the call: https://github.com/lfai/trusted-ai/blob/master/committee-meeting-notes/notes-20191212.md | |

Proposed Agenda:

Notes from call: https://github.com/lfai/trusted-ai/blob/master/committee-meeting-notes/notes-20191114.md | |

Attendees: Ofer, Alka, Francois, Nat, Han, Animesh, Jim, Maureen, Susan, Alejandro

Summary

Detail

Notes from the call: https://github.com/lfai/trusted-ai/blob/master/committee-meeting-notes/notes-20191017.md | |

Attendees: Animesh Singh (IBM), Maureen McElaney (IBM), Han Xiao (Tencent), Alejandro Saucedo, Mikael Anneroth (Ericsson), Ofer Hermoni (Amdocs)

Animesh will check with Souad Ouali to ensure Orange wants to lead the Principles working group and host regular meetings. Committee members on the call were not included in the email chains that occurred, so we need to confirm who is in charge and how communication will occur. The Technical working group has made progress but has nothing concrete to report yet. A possible third working group could form around AI Standards.

Notes from the call: https://github.com/lfai/trusted-ai/blob/master/committee-meeting-notes/notes-20191003.md | |

| Attendees: Ibrahim H., Nat S., Animesh S., Alka R., Jim S., Francois J., Jeff C., Maureen M., Mikael A., Ofer H., Romeo K.

Working Group Names and Leads have been confirmed:

Possible discussion about a third working group

Discussion about LFAI day in Paris

More next steps

Will begin recording meetings in future calls.

Notes from call: https://github.com/lfai/trusted-ai/blob/master/committee-meeting-notes/notes-20190919.md |

6 Comments

Jim Spohrer

Here are some additional notes taken by Jim Spohrer (IBM) <spohrer@us.ibm.com> - https://docs.google.com/document/d/1QJ3t0YD3mzOa-gajjbHtgjXEO7_LPY1gmRW1SHcoNo4/edit

Jim Spohrer

This document: https://docs.google.com/document/d/1QJ3t0YD3mzOa-gajjbHtgjXEO7_LPY1gmRW1SHcoNo4/edit?usp=sharing

LF AI - Trusted AI Committee Call - Every other week

(may change)

Ibrahim Haddad (LF): I will set up "Trusted AI" Zoom to record future calls

Ibrahim: Attendees should go to Wiki for agenda: https://wiki.lfai.foundation/display/DL/LF+AI+Trusted+AI+Committee

Jim Spohrer (IBM): We should rename ourselves when we join Zoom so people can easily see our company; I just did that (a good point to add to the meeting start when we review the agenda)

Nat Subramanian (Tech Mahindra): Wiki todo: Need a section of wiki to highlight guest speakers

Ibrahim: Zoom todo: will set up a Zoom for Trusted AI, and in future the co-chairs will be able to record

Animesh Singh (IBM): Showed a Google Doc with agenda - need to get link

Animesh: Two working groups (WG) will have lead, core members, optional members and meet separately

Animesh: WG Principles and WG Technical

Animesh: WG Technical focus: landscape of projects in the Trusted AI area, specifically those coming into LF AI to support Trusted AI work

Animesh: Which OS projects will come into LF AI and which are being integrated into LF AI projects

Animesh: Face-to-face meetings for WG will need to be planned by WG leads

Animesh: WG should have outcome and timetable

Animesh: sharing a Google Doc with the above information

Maureen McElaney (IBM): I’ve added a loose agenda for today’s call to the wiki. Since I’m on mobile can someone take on the responsibility of adding any further notes there?

Jim Spohrer (IBM): I am taking notes and can clean them up and upload them, link from Wiki to notes

Animesh: Introducing Romeo Kienzler (IBM Switzerland), who added an LF AI meeting in Paris hosted by Orange

Animesh: Romeo has created top data science and AI courses on Coursera

Alka Roy (AT&T Innovation Center) - 7:15 AM PT - Had trouble with new link - just joining.

Romeo Kienzler (IBM Switzerland): Working on pipelines for technical working group

Animesh: Can we use Slack to keep an ongoing work cadence?

Nat: Not Slack, because of licensing complications. ICRC is being used instead. Communication channel gap.

Nat: Acumos has SCRUM meetings almost every day

Nat: Acumos meetings - we can re-use time slots and leverage for Trusted AI WG.

Nat: Tencent Angel team is interested in onboarding a model

Nat: two working groups - Principles and Technical

Nat: Synergies between Acumos + Angel + AIF360

Nat: Principles works on policies and strategies

Alka Roy (AT&T Innovation Center) - 7:30 AM PT: Good morning!

Alka: How to get to a set of criteria and guidelines?

Animesh: job of WG Principles

Alka: Principles team creates, and technical team provides feedback/adopts

Animesh: Yes, criteria evolve with feedback from the technical WG

Nat Subramanian (Tech Mahindra): Romeo is IBM in Switzerland?

Nat: China has most difficulty joining with times we have proposed so far (early California)

Animesh: Romeo’s time might be better for China and India meetings

Animesh: Let’s identify the right subset of people and let them find a good weekly time; since Nat knows the folks, he can best find a way to loop in Romeo. I am OK with calls after 10pm PT

Nat: I will get a time for Technical WG

Animesh: I will add Romeo's email to the Wiki; his email is romeo.kienzler@ch.ibm.com

Francois Jezequel (Orange) - 7:32 AM PT: Souad is willing to continue, just not available today

Animesh: Souad

Francois: “Sue-wad” rhymes with “Squad”

Alka: IEEE has good work we can borrow from

Alka: how to apply principles, practical technical and principle WG interactions

Alka: going beyond theory into practice will be helpful

Animesh: Required people and optional people

Animesh: Lineage -> Certification -> Badging

Animesh: Can help connect with IBM Research and ATT and rest of Principles committee

Animesh: Factsheets project is relevant - Factsheets: Increasing Trust in AI Services Through Supplier Documents of Conformity - Certifications - https://arxiv.org/pdf/1808.07261.pdf https://www.ibm.com/blogs/research/2018/08/factsheets-ai/

Alka: Please add me to the Principles WG (optional), and I will find the right required member from AT&T

Animesh: Alka is Bay Area for a visit sometime to discuss

Alka: Rueben (AT&T)

Alka: I will make sure AT&T is engaged

Nat: I have met with Rueben in NJ

Jeff Cao (Tencent) - 7:44 AM PT: I have an idea for a technical person: Fitz Wang (Tencent)

Nat: I will reach out to Fitz, and please share name of others

Mikael Anneroth (Ericsson) - 7:45 AM PT: please add Ericsson to Principles work group - would like to share and discuss - I am in Stockholm Sweden

Jeff Cao (Tencent): Han Xiao is on the list

Animesh: Susan Malaika (IBM) is also interested

Animesh: Will Orange drive Principles?

Jeff Cao (Tencent): Tencent can join the principles group

Francois Jezequel (Orange): Yes, Souad is interested in leading Principles WG

Alka: Identify gaps, since a lot of work has already been done

Francois: Minimum intersection that can be operationalized (how to make it practical in operation)

Alka: Let’s put the goal in writing, and decide when to meet to share each perspective, consolidate, and agree

Animesh: Francois or Souad can send a kick-off email, and each group can add its members (e.g., IBM can include Susan, Maureen, Jim, etc.)

Animesh: Shared several Google doc links and will upload them to Wiki

Alka: A face-to-face strategy meeting is being planned for October; by that time we should have converged on principles and have progress to report

Ofer: End of October, OSS Oct 31, full day governing board meeting

Nat: Detailed readout on Oct 31

Alka: good deadline

Alka: Trusted and Responsible AI to be added to the mission for LF AI; any objections?

All: No objections noted

Jim Spohrer

Working Group Names and Leads:

Principles, lead: Souad Ouali (Orange France) with members from Orange, AT&T, Tech Mahindra, Tencent, IBM, Ericsson, Amdocs.

Technical, lead: Romeo Kienzler (IBM Switzerland) with members from IBM, AT&T, Tech Mahindra, Tencent, Ericsson, Amdocs, Orange.

Working groups will have a weekly meeting to make progress. First read out to LF AI governing board will be Oct 31 in Lyon France.

The Principles team will study the existing material from companies, governments, and professional associations (IEEE), and, as a first step, come up with a set that can be shared with the technical team for feedback. We need to identify and compile the existing materials.

The Technical team is working on an Acumos+Angel+AIF360 integration demonstration.

Jim Spohrer

Thanks Souad for your note below to committee members - just re-reading it...

(1) Great to keep a global balance on the committee

(2) Look forward to definitions you are drafting

(3) Collecting material - see some links below.

(4) Cross analysis - Harvard study good starting point.

(5) Pragmatic approach - what real-world enterprise use cases?

(6) Open source tools - how tools and Trusted AI Workflows relate to above use cases?

(7) Watch on LFAI landscape projects - which projects have been checked against principles?

(8) How to ensure trust in open source package - how to organize audit to ensure files not corrupted? See Factsheets link below for a start.

Some useful links:

Globally Diverse - LFAI Trusted AI Committee: https://wiki.lfai.foundation/display/DL/Trusted+AI+Committee

University: Harvard AI Principles meta-analysis: 32 sets of principles side by side, with 8 themes: https://ai-hr.cyber.harvard.edu/primp-viz.htm

Company: AT&T (Tom Moore, Chief Privacy Officer): https://about.att.com/innovationblog/2019/05/our_guiding_principles.html

Company: IBM Everyday Ethics for AI: https://www.ibm.com/watson/assets/duo/pdf/everydayethics.pdf

Company: IBM NIST AI Standards response: https://www.nist.gov/sites/default/files/documents/2019/06/06/nist-ai-rfi-ibm-001.pdf

Company: IBM Factsheets: Increasing Trust in AI Services Through Supplier Documents of Conformity - Certifications - https://arxiv.org/pdf/1808.07261.pdf

Company: Tencent sent some files - but I do not have a URL to the documents.

Trusted AI group calendar: https://wiki.lfai.foundation/pages/viewpage.action?pageId=12091895 (Please subscribe)

Mailing list: https://lists.lfai.foundation/g/trustedai-committee (Please subscribe)

GitHub: https://github.com/lfai/trusted-ai/

Q: Where is the best place for us to upload documents to share with the Trusted AI Committee/Principles WG?

Jim Spohrer

Some notes from Oct 17, 2019 committee call:

Summary

- Animesh walked through the draft slides about TAIC (to be presented to the LFAI governing board in Lyon)

- Discussion of changes to make

- Discussion of members, processes, and schedules

Detail

- Jim will put the slides in a Google Doc and share them with all participants (https://drive.google.com/drive/folders/1RSHBzTj7SRpbioR31JP9ASEjvuV9kqnw)

- Susan is exploring a slack channel for communications

- Trust and Responsibility, Color, Icons to add Amdocs, Alejandro's Institute

From chat:

+ @Ofer to PWG

Alka: helped draft these - and acknowledges need to get everyone’s perspective - asked about feedback loop with Usecases Group

Francois: These slides will be a good resource, thank you; now we have to organize the way we contribute to each part of the document. Souad will lead getting consensus and finalizing this draft

Francois: Still need to define the connection between principles and tooling, and make it concrete

Animesh: Members of UWG?

Animesh: Need members who will create use cases, both software and actual industry use cases

Animesh: What are the telco use cases?

Alka: personal focus on PWG definition. Will work to gather AT&T use cases and try to find the right active person

Nat: Interested, yes. However, the current focus is on the release; in 3-4 weeks the Acumos release will be complete, and technical people are tied up on it.

Nat: Reuben can give guidance, and Nat is working on the next level of technical experts

Han: Jeff and I are working to raise awareness and attract others in Tencent, getting the open source office and developer team involved. Find real-world use cases

Francois: Discussion of when PWG will meet - off-line and maybe two meetings. (Alka, Francois, Jeff, Susan)

Alka: I have material to share with everyone when we can schedule that

Animesh: Alejandro has great material on GitHub.

Jim Spohrer

January 23 meeting notes:

Attendees: Animesh Singh (IBM), Samuel Hoffman (IBM), Nat (TechM), Susan Malaika (IBM), Alejandro Saucedo (Ethical AI Institute and Seldon), Maureen McElaney (IBM), Ofer Hermoni, Parag Ved, Yassi Moghaddam (ISSIP), Jim Spohrer (IBM)

Animesh: Asked Nat for Acumos update

Nat: Acumos update

Animesh: Introduced Samuel Hoffman (IBM Research) to give a Trusted AI and scikit-learn demo

Nat: Will the IBM materials be donated to LF AI? Aligned with IBM Product OpenScale?

Animesh: It would be contributed if there is LF AI pull and community adoption

Jim: Correct, product team at IBM wants to see community pull for it to be contributed (actual use and contributions from community)

Nat: Mentioned Acumos Gaya - and will send links

Ofer: Yassi, please introduce yourself

Yassi: introduced herself as exec director of professional association (Jim on Board, Susan lead on AI speaker series)

Nat: Gave a history of the LF AI Trusted AI Committee: companies from Asia, USA, and Europe involved; two working groups, use cases and principles

Nat: constantly evolving area

Ofer: discuss it